“Attention Is All You Need” introduces the Transformer model.

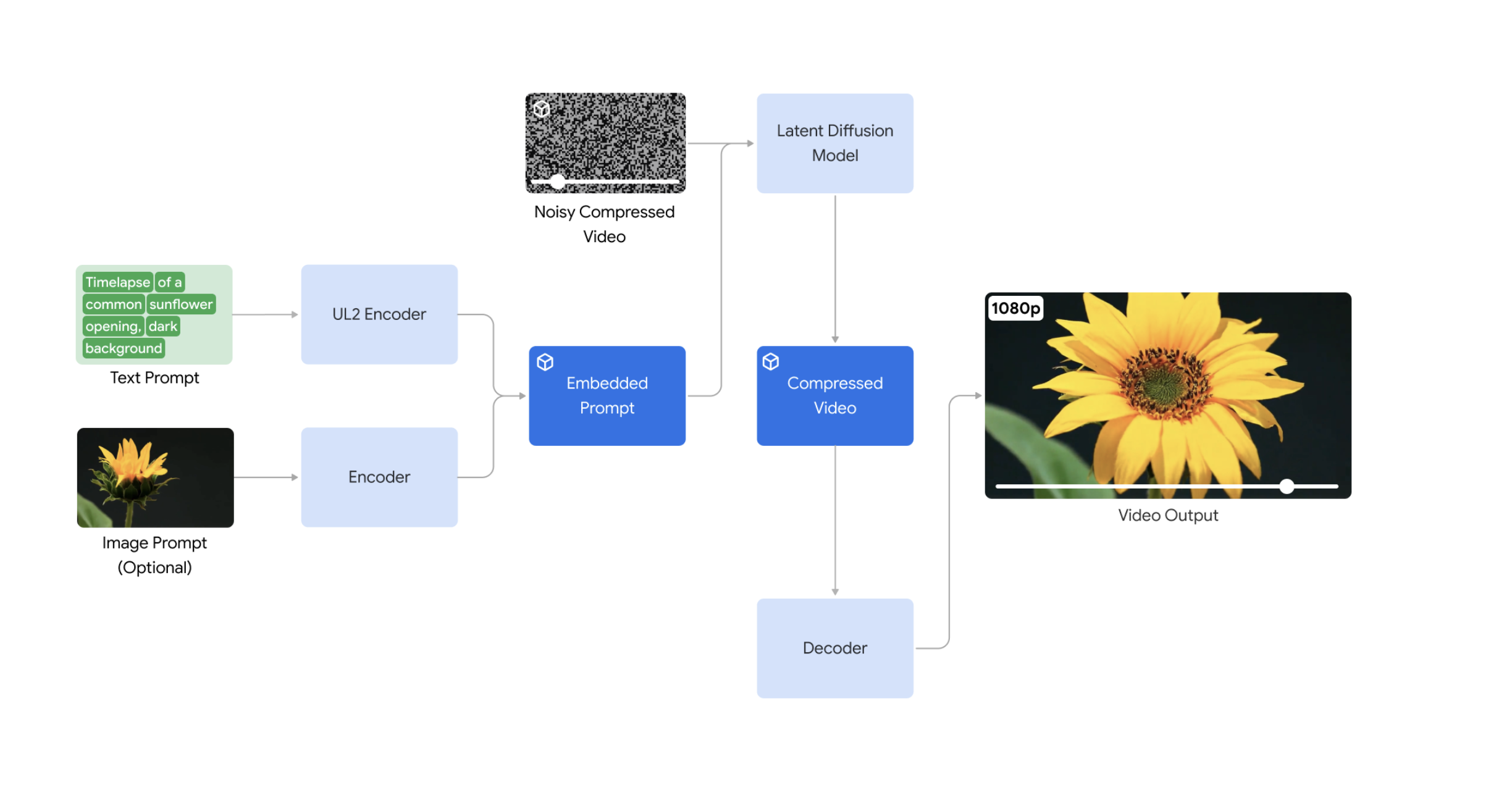

The Transformer model consists of an encoder-decoder structure, where both the encoder and decoder are built from stacked layers of multi-head self-attention and feed-forward networks. Because self-attention relates every position in a sequence to every other position directly, the model can capture dependencies between words regardless of their distance in the sequence, which contributes to its strong performance on tasks like language translation.
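To make the idea of distance-independent dependencies concrete, here is a minimal sketch of single-head scaled dot-product self-attention in plain Python. It is an illustration under simplifying assumptions, not the paper's full implementation: the learned query, key, and value projections, multiple heads, masking, and positional encodings are all omitted, and the function names and toy embeddings are made up for this example.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of equal-length vectors.

    Each query is compared with every key, so the first and last
    positions interact just as directly as adjacent ones.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Dot product of this query with every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        w = softmax(scores)
        # Output is the attention-weighted sum of the value vectors.
        out = [
            sum(wj * v[i] for wj, v in zip(w, values))
            for i in range(len(values[0]))
        ]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


# Toy "sentence" of three token embeddings (made-up numbers).
# Assumption: queries, keys, and values are the raw embeddings;
# the real model first applies learned linear projections.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
```

Each row of `weights` is a probability distribution over all positions in the sequence. In this toy input, the first token puts equal weight on itself and on the third token even though they are not adjacent, because the weights depend only on vector similarity and not on position.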

The paper demonstrates that the Transformer outperforms previous state-of-the-art models in machine translation tasks, achieving higher BLEU scores with significantly less training time. The model’s architecture also generalizes well to other tasks, such as English constituency parsing, where it achieves competitive results.

The authors conclude that the Transformer sets a new standard in sequence modeling and opens up possibilities for applying attention mechanisms to other domains beyond text, such as images and audio.